Powering AI Centers with AI Spines

Leaf-spine architectures have been widely deployed in the cloud, a model pioneered and popularized by Arista since 2008. Arista’s flagship 7800...

As more and more modern applications move to hybrid or public clouds, their placement strains network infrastructure. It only makes sense to leverage the massive investments of public cloud providers. Because public clouds must interact directly with data center resources, cloud applications have to be deployed in a distributed manner, with an appropriate networking design for DCI (Data Center Interconnect). This goes beyond the typical data replication and management issues of the 1990s and 2000s, when several tunneling solutions emerged around the concept of a “pseudo-wire” emulating point-to-point Ethernet links over wide area networks. Unfortunately, many of these early tunneling mechanisms (such as EoGRE, EoMPLS and EoATM) did not scale well. They were cumbersome to deploy and complex to troubleshoot, and they did not deliver fault isolation between data centers, especially at mega-scale locations.

The bottom line is that a new paradigm is required to allow any-to-any scale across sites for cloud-networked compute and storage resources.

Key Considerations for Cloud Interconnect

With cloud networking scaling and advancing rapidly from 2015 to 2020, what does the network need to provide to support seamless auto-scaling of these key cloud applications?

Cloud internetworking places new demands and criteria on the network. The network must be:

Cloud Internetworking Options:

Auto-scaling of resources is becoming possible both within a single cloud and across private and public clouds. As a result, cloud interconnect is evolving, and the many emerging technology options call for a thoughtful designer's toolkit to demystify the puzzle of IP subnets, VXLAN extension and DWDM optical transport across multiple data centers. Three important choices can be considered:

1. Layer 1 Spine Transit:

Data centers can be interconnected using 10/40/100GbE. Ethernet can run directly over dark fiber or, at longer distances, over xWDM wavelengths. Multiple Ethernet links can be bundled either statically or dynamically, with dynamic bundles negotiated by the LACP (IEEE 802.3ad) protocol. Dark fiber or xWDM wavelengths can provide back-to-back connectivity between routers, and these links can also be secured with encryption options.
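The difference between static and LACP-negotiated bundles can be illustrated with a small model of link-aggregation modes. This is a simplified sketch that assumes the common "on" / "active" / "passive" mode names found in many switch CLIs; it only decides whether a bundle would come up and does not model the LACPDU exchange itself.

```python
from enum import Enum


class LagMode(Enum):
    ON = "on"            # static bundle: no LACP, both ends must be set statically
    ACTIVE = "active"    # LACP: sends LACPDUs to initiate negotiation
    PASSIVE = "passive"  # LACP: answers LACPDUs but never initiates


def bundle_forms(local: LagMode, remote: LagMode) -> bool:
    """Return True if, in this simplified model, the link bundle comes up."""
    if LagMode.ON in (local, remote):
        # Static mode does not speak LACP, so it only pairs with another static end.
        return local is LagMode.ON and remote is LagMode.ON
    # Dynamic negotiation needs at least one end to initiate.
    return LagMode.ACTIVE in (local, remote)


if __name__ == "__main__":
    for a in LagMode:
        for b in LagMode:
            print(f"{a.value:>7} <-> {b.value:<7} forms={bundle_forms(a, b)}")
```

Running the module prints the full compatibility matrix. Note that two passive ends never form a bundle, which is why at least one side of a dynamic DCI link is usually configured as active.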

2. Layer 2 over Layer 3 Spine Interconnect:

The foundation of Arista-pioneered L2 over L3 is Virtual eXtensible Local Area Network (VXLAN), an open IETF specification (RFC 7348) that standardizes an overlay encapsulation protocol capable of relaying Layer 2 traffic over IP networks. Its inherent ability to relay unmodified Layer 2 traffic transparently over any IP network makes VXLAN an ideal and intuitive technology for network interconnection. The key is to get started with deployment scenarios that span public and private cloud internetworking.
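How VXLAN carries an unmodified Layer 2 frame can be sketched by building the 8-byte VXLAN header from RFC 7348: a flags byte with the "I" bit set, a 24-bit VXLAN Network Identifier (VNI), and reserved fields. This is a minimal illustration; the function names are hypothetical, and in practice the outer UDP (destination port 4789), IP and Ethernet headers are added by the VTEP or host IP stack.

```python
from __future__ import annotations

import struct

VXLAN_UDP_PORT = 4789  # IANA-assigned VXLAN destination port (RFC 7348)
VXLAN_FLAG_I = 0x08    # "I" flag: the VNI field is valid


def vxlan_header(vni: int) -> bytes:
    """Build the 8-byte VXLAN header for a 24-bit VNI."""
    if not 0 <= vni < 2**24:
        raise ValueError("VNI must fit in 24 bits")
    # Word 1: flags (8 bits) + 24 reserved bits.
    # Word 2: VNI (24 bits) + 8 reserved bits.
    return struct.pack("!II", VXLAN_FLAG_I << 24, vni << 8)


def encapsulate(inner_frame: bytes, vni: int) -> bytes:
    """Prepend the VXLAN header; the inner Ethernet frame is left untouched."""
    return vxlan_header(vni) + inner_frame


def decapsulate(payload: bytes) -> tuple[int, bytes]:
    """Strip the VXLAN header and return (vni, original inner frame)."""
    flags_word, vni_word = struct.unpack("!II", payload[:8])
    if not (flags_word >> 24) & VXLAN_FLAG_I:
        raise ValueError("VXLAN I flag not set")
    return vni_word >> 8, payload[8:]
```

For example, `vxlan_header(10010).hex()` yields `"0800000000271a00"`, and `decapsulate(encapsulate(frame, 10010))` returns the VNI together with the byte-identical original frame, which is the transparency property that makes VXLAN suitable for extending Layer 2 segments between sites.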

3. Layer 3 Spine Router-SRD:

Routing based on BGP is the most popular option today. However, routers with large route tables can be expensive and offer limited 40GbE and 100GbE port density. Spine routing combined with BGP Selective Route Download (SRD) allows BGP prefixes to be learned and advertised without being installed in hardware. Route-map semantics control which routes are installed, and the filtered routes are flagged as inactive in the RIB (Routing Information Base). With SRD, the number of routes is no longer constrained by hardware table limits, and the spine can relay far more prefixes to its peers.
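The SRD behavior described above can be modeled conceptually: every learned prefix is kept in the RIB and advertised to peers, but only prefixes permitted by an installation filter are written to the hardware forwarding table (FIB). This is a hypothetical sketch, not Arista EOS configuration or code; `SrdRouter` and its prefix-based `install_filter` are illustrative stand-ins for a route-map.

```python
from __future__ import annotations

import ipaddress
from dataclasses import dataclass, field


@dataclass
class Route:
    prefix: ipaddress.IPv4Network
    next_hop: str
    active: bool = False  # True only if the route is installed in hardware (FIB)


@dataclass
class SrdRouter:
    # Hypothetical stand-in for a route-map: install only prefixes
    # that fall inside one of these aggregates.
    install_filter: list[ipaddress.IPv4Network]
    rib: dict[ipaddress.IPv4Network, Route] = field(default_factory=dict)
    fib: dict[ipaddress.IPv4Network, str] = field(default_factory=dict)

    def permits(self, prefix: ipaddress.IPv4Network) -> bool:
        return any(prefix.subnet_of(agg) for agg in self.install_filter)

    def learn(self, prefix: str, next_hop: str) -> None:
        net = ipaddress.ip_network(prefix)
        route = Route(net, next_hop)
        if self.permits(net):
            route.active = True
            self.fib[net] = next_hop  # consumes a hardware table entry
        self.rib[net] = route         # every route stays in the RIB

    def advertise(self) -> list[str]:
        # All learned prefixes are relayed to peers, installed or not.
        return sorted(str(r.prefix) for r in self.rib.values())
```

With `install_filter=[ipaddress.ip_network("10.0.0.0/8")]`, learning `10.1.0.0/16` and `203.0.113.0/24` installs only the first in the FIB, leaves the second inactive in the RIB, and still advertises both. The hardware table therefore grows only with the filtered subset, while the control plane carries the full table.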

Introducing 1-2-3 Spine Options for Cloud Connect

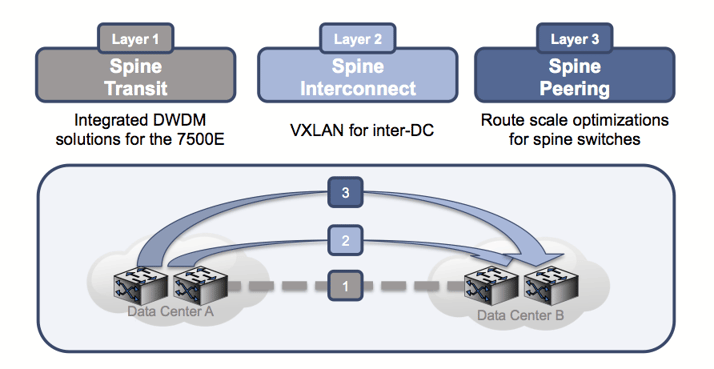

At SC15, Arista is proud to enhance our industry-leading Spine solutions with the Arista 7500E. Key highlights are shown in the figure below:

Figure L1/2/3: The three options for Spine Internetworking

L1. Spine-DWDM Transit: Arista’s flagship 7500E Spine can connect directly into single-mode fiber with embedded 100GbE DWDM integration and optional encryption for long-distance transits (from hundreds to thousands of km) between 7500E spines, bringing both consolidated security and cost-efficiency across data centers.

L2. Spine-VXLAN Interconnect: The simplicity of a network-wide VXLAN-based Spine provides L2 over L3 integration between workloads, with enhanced virtual-physical visibility based on CloudVision™ VCS, not only within a single data center but across data centers.

L3. Spine-Peering: A resilient and highly available Spine with plenty of east-west bandwidth, free of the classical router deficiencies of oversubscription, high price and low port density, provides L3 BGP routing with SRD optimization, leveraging the ever-increasing capabilities of merchant silicon.

Arista’s cloud interconnect solutions offer security, simplicity, efficiency, scale and flexibility. They represent the industry’s first Spine choices at L1, L2 and L3, with uncompromised performance and highly available cloud-network interconnect. I am pleased to see Arista Spines at the forefront of this exciting step from cloud networking to cloud internetworking!

I always welcome your comments at feedback@arista.com.
